Cilium: Next-Generation Networking & Security from the CNCF with Golang, eBPF & Hubble

Cilium is an open source, next-generation networking and security solution for cloud native environments, built on mechanisms and capabilities such as eBPF, Hubble, Cluster Mesh and Layer 7 (API-aware) authorization. It started in 2015 as a GitHub commit aimed at providing security and observability for network connectivity between workloads, and it is now used by big players such as Google Cloud, Amazon AWS, Microsoft Azure, Alibaba Cloud, Adobe, Bell, Datadog and many more.

Cilium has a lot of mechanisms and capabilities that will catch your attention as an engineer or even as a student. In this blog I am going to break down the following:

eBPF for Dynamic insertion

CiliumEndpointSlice

Hubble

Load balancing

Cluster Mesh

Identity Based Security

API Aware Authorization

Installing Cilium

Before discussing the functionality and the mechanisms, let's quickly install Cilium and its basic command line interface.

Cilium CLI

First, we can download the Cilium CLI, which lets us manage and operate Cilium and gives a cluster-wide and, in multi-cluster mode, a cross-cluster view of everything. It interacts with the Kubernetes API, so we can do everything using kubectl as well.

Once the CLI is installed on your machine, it can install Cilium into the cluster: it deploys the Cilium DaemonSet and the operator, so a Cilium agent runs on every node. Several resources are created automatically along the way, such as the cluster role, the service account, config maps and secrets, and the installer detects the best datapath mode for your Kubernetes environment, for example native routing on GKE.

cilium status

Shows the status of Cilium: DaemonSet, Deployments, container status and image versions.

cilium connectivity test

This creates the cilium-test namespace in the cluster, deploys a couple of pods into it and then runs a connectivity test. It tests pod to pod across nodes, services, with policy and without policy, masquerading, node to pod, pod to node and various other scenarios. So, if we run into any issues, we can run the connectivity test.

k -n cilium-test get pods

Lists the pods running in the cilium-test namespace (k here is a shell alias for kubectl).

k -n cilium-test get svc

Lists the services running in the cilium-test namespace.

cilium bpf

Lists the subcommands available for inspecting and working with BPF in Cilium.

cilium bpf endpoint list

This shows the eBPF endpoint map inside Cilium. eBPF maps are the data structures used to store state for eBPF programs; this one lists IP addresses, flags and MAC addresses for the endpoints on the node.

Cilium Features

eBPF (Extended Berkeley Packet Filter)

eBPF, which stands for Extended Berkeley Packet Filter, is developed jointly by Isovalent and Facebook in collaboration with Google, Red Hat, Netflix, Cloudflare and many more. It runs at native execution speed: applications or pods keep running in user space, while custom programs run in the kernel in response to kernel events. It is a mechanism for running small programs in a virtual machine embedded in the Linux kernel on a Linux host such as a Kubernetes node.

These programs are attached to hooks, which are triggered when some event occurs. This allows the behavior of the kernel to be customized. The pre-defined hooks include network events, system calls, function entry and exit points, kernel tracepoints and others.

eBPF started out on Linux and has since been ported to Windows, making it a generic programming interface for operating systems that lets us run programs and customize the operating system when certain events happen.

Impact on Kubernetes

eBPF changed the tooling around Kubernetes, containers and Docker, which relies on kernel technology: with eBPF we can dynamically extend the kernel in a safe and efficient way, which makes the Linux kernel a center for innovation.

eBPF makes it possible to observe all these different types of events and activities, so we can use it to hook into and observe virtually everything happening across the system.

Applications of eBPF

eBPF is used by Cilium, which we will get to in a short while, but first let's look at how it is being used by some other tech giants.

The entire network layer at Facebook, including DDoS protection, firewalling and load balancing, is done with eBPF. eBPF is a very low-level technology, though, so it needs an application on top that abstracts it away to make it useful. eBPF is therefore rarely used as a direct interface: it stays out of sight, yet we still rely on it on a daily basis.

tcpdump also relies on BPF: it uses the earlier version, now called classic BPF, which was a small subset of what eBPF has become. A tcpdump filter expression is translated into a BPF program, and that filter defines which packets tcpdump displays.

How Cilium Uses eBPF

Cilium uses eBPF to dynamically insert powerful security, visibility and networking control logic into the Linux kernel. eBPF provides high-performance networking, multi-cluster and multi-cloud capabilities, advanced load balancing, transparent encryption, extensive network security capabilities, transparent observability and much more.

Cilium's eBPF programs are triggered at attachment points, so they can inspect traffic, monitor it or perform bandwidth management as network packets pass through. This lets Cilium observe flows going through a network device or coming from processes and sockets.

Endpoints

Cilium is not Kubernetes-specific. It is highly popular with folks running Kubernetes, but Cilium is actually written in a general-purpose way, so an endpoint it manages can be a Kubernetes pod, as it is for most users, but it can also be an entire virtual machine, a Linux cgroup or just a namespace.

So, Cilium can manage anything from a cgroup to a Linux network device as an endpoint.

An endpoint is anything that has an IP address and is addressable on the network. We can always validate exactly what is programmed in the eBPF datapath. Compare this to something like iptables or other implementations, where we would be looking through hundreds or thousands of rules: Cilium gives us a neat and structured way to validate exactly what is programmed into the datapath.

There are some commands we can perform with Cilium for Endpoints:

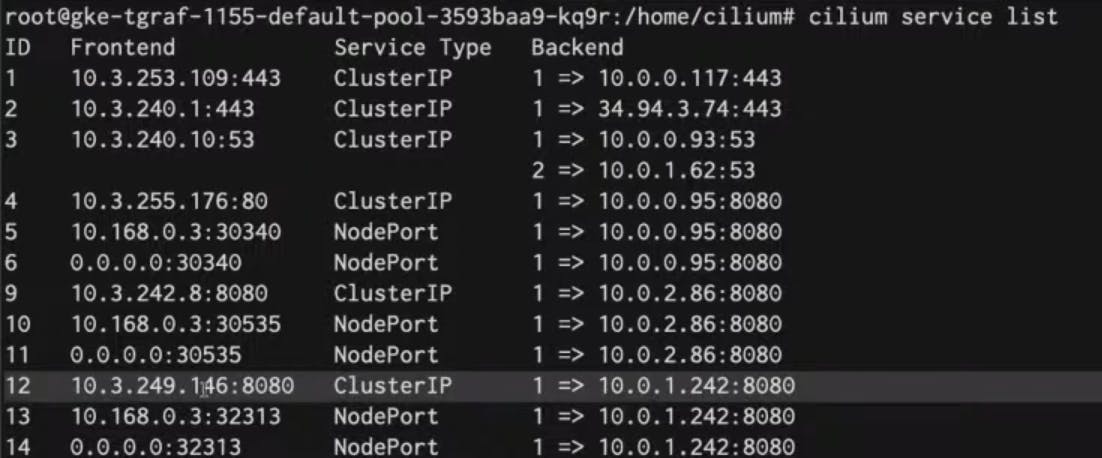

This will give the list of all the services that cilium implements.

cilium bpf lb list

Here we go one level deeper and look at the load balancing layer from the kernel's point of view: this shows what is actually stored in the kernel.

Hubble

Developers often find it difficult to create network policies, and Cilium network policies in particular, because they first need to learn what is going on inside their namespaces and workloads. Hubble solves this.

Hubble is Cilium's distributed observability, monitoring and tracing tool. It lets us inspect flows inside our Kubernetes clusters, figure out what is going on and troubleshoot connectivity.

Hubble consists of three parts:

- Hubble UI

It shows dependency maps for our services and shows flows, which can be filtered down to a specific pod and the kind of traffic entering and leaving it for deeper inspection. It also lets us inspect the current network policies.

- Hubble CLI

The Hubble CLI provides detailed flow information with extensive filtering of the traffic, and can output flows as JSON.

- Hubble Metrics

Hubble Metrics provides built-in metrics for monitoring operations and applications, which can be viewed using Grafana and Prometheus.
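Since the Hubble CLI can emit flows as JSON, small tools can post-process that output. The following is a minimal sketch in Go, assuming one JSON object per line and modelling only a small, simplified subset of a flow record (the field names flow, verdict, source, destination, namespace and pod_name are assumptions about the output shape, and countVerdicts is a hypothetical helper, not part of Hubble):

```go
package main

import (
	"bufio"
	"encoding/json"
	"fmt"
	"strings"
)

// Endpoint, Flow and Record model an assumed, simplified subset of a flow record.
type Endpoint struct {
	Namespace string `json:"namespace"`
	PodName   string `json:"pod_name"`
}

type Flow struct {
	Verdict     string   `json:"verdict"`
	Source      Endpoint `json:"source"`
	Destination Endpoint `json:"destination"`
}

type Record struct {
	Flow Flow `json:"flow"`
}

// countVerdicts reads newline-delimited JSON and counts flows per verdict.
func countVerdicts(input string) (map[string]int, error) {
	counts := make(map[string]int)
	scanner := bufio.NewScanner(strings.NewReader(input))
	for scanner.Scan() {
		line := strings.TrimSpace(scanner.Text())
		if line == "" {
			continue // skip blank lines between records
		}
		var rec Record
		if err := json.Unmarshal([]byte(line), &rec); err != nil {
			return nil, err
		}
		counts[rec.Flow.Verdict]++
	}
	return counts, scanner.Err()
}

func main() {
	// Hand-written sample input, not captured from a real cluster.
	sample := `{"flow":{"verdict":"FORWARDED","source":{"namespace":"cilium-test","pod_name":"client"},"destination":{"namespace":"kube-system","pod_name":"kube-dns"}}}
{"flow":{"verdict":"DROPPED","source":{"namespace":"cilium-test","pod_name":"client"},"destination":{"namespace":"cilium-test","pod_name":"echo"}}}`

	counts, err := countVerdicts(sample)
	if err != nil {
		panic(err)
	}
	fmt.Println(counts["FORWARDED"], counts["DROPPED"]) // prints: 1 1
}
```

A summary like this is handy when writing a network policy: a sudden rise in dropped flows usually points at a rule that is too strict.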

Now let's go through some commands that make working with Hubble in a Cilium cluster smoother.

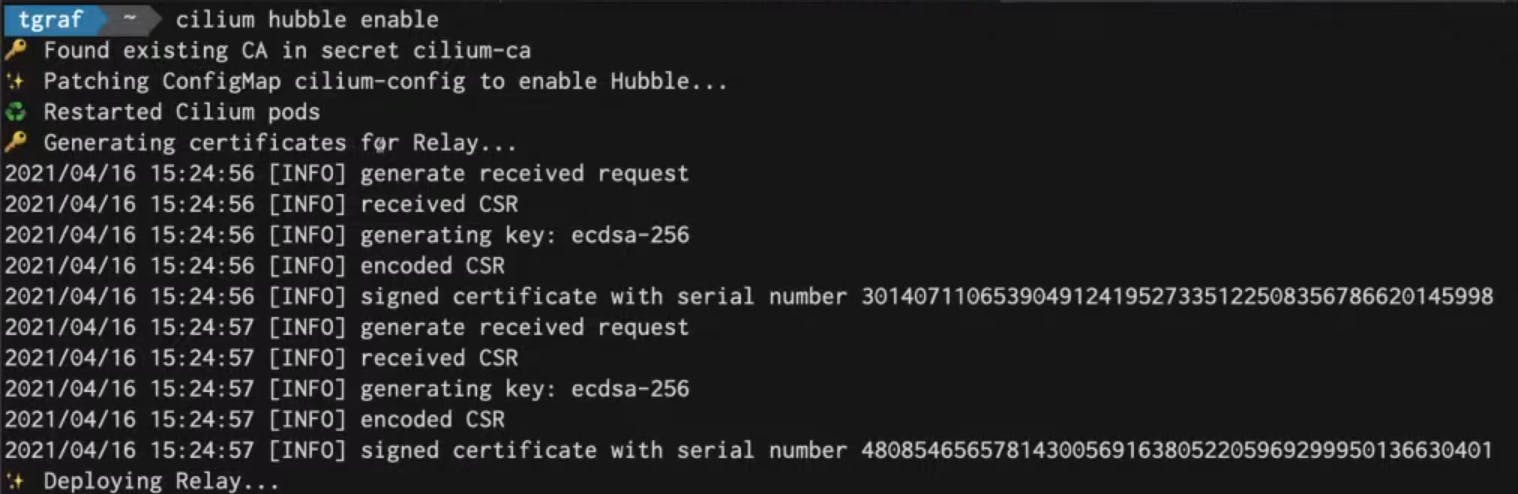

This enables the Hubble feature in Cilium, which generates certificates for Relay and then deploys Relay.

The Relay service is basically a distributed tcpdump: it is an API that provides flow tracing for the entire cluster, even with thousands of nodes, so we can query the whole cluster from the network visibility side.

Here we see the status of Cilium, showing all the deployments, DaemonSets, containers and image versions, as well as the status of Hubble running in Cilium.

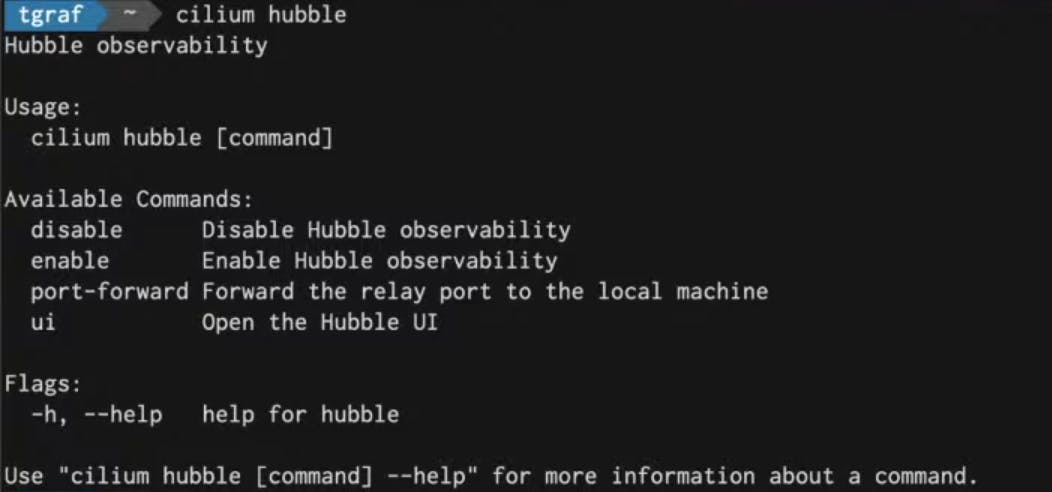

This lists all the subcommands available under the hubble command in Cilium. As soon as we run it we see commands such as disable, enable, ui and port-forward.

cilium hubble port-forward

Let's take the port-forward subcommand as an example of using the hubble command with Cilium.

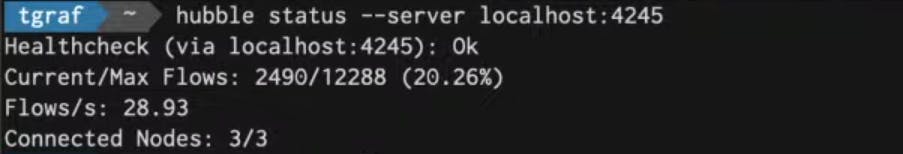

This reports health checks, the current and maximum number of flows, and the number of flows per second. Flows are the traffic we are receiving: each network connection is one flow.

hubble observe --compact --server localhost:4245

Now we can see every connection across the network and across the cluster. The output can be narrowed down and searched for specific parts, for example kube-dns: we then get all the network flows from the kube-dns pod and can dig deeper and debug as needed. This makes troubleshooting much easier and more efficient, and all of it is powered by eBPF.

This deploys the Hubble UI, which can be seen among the pods. It then opens the browser with the list of namespaces:

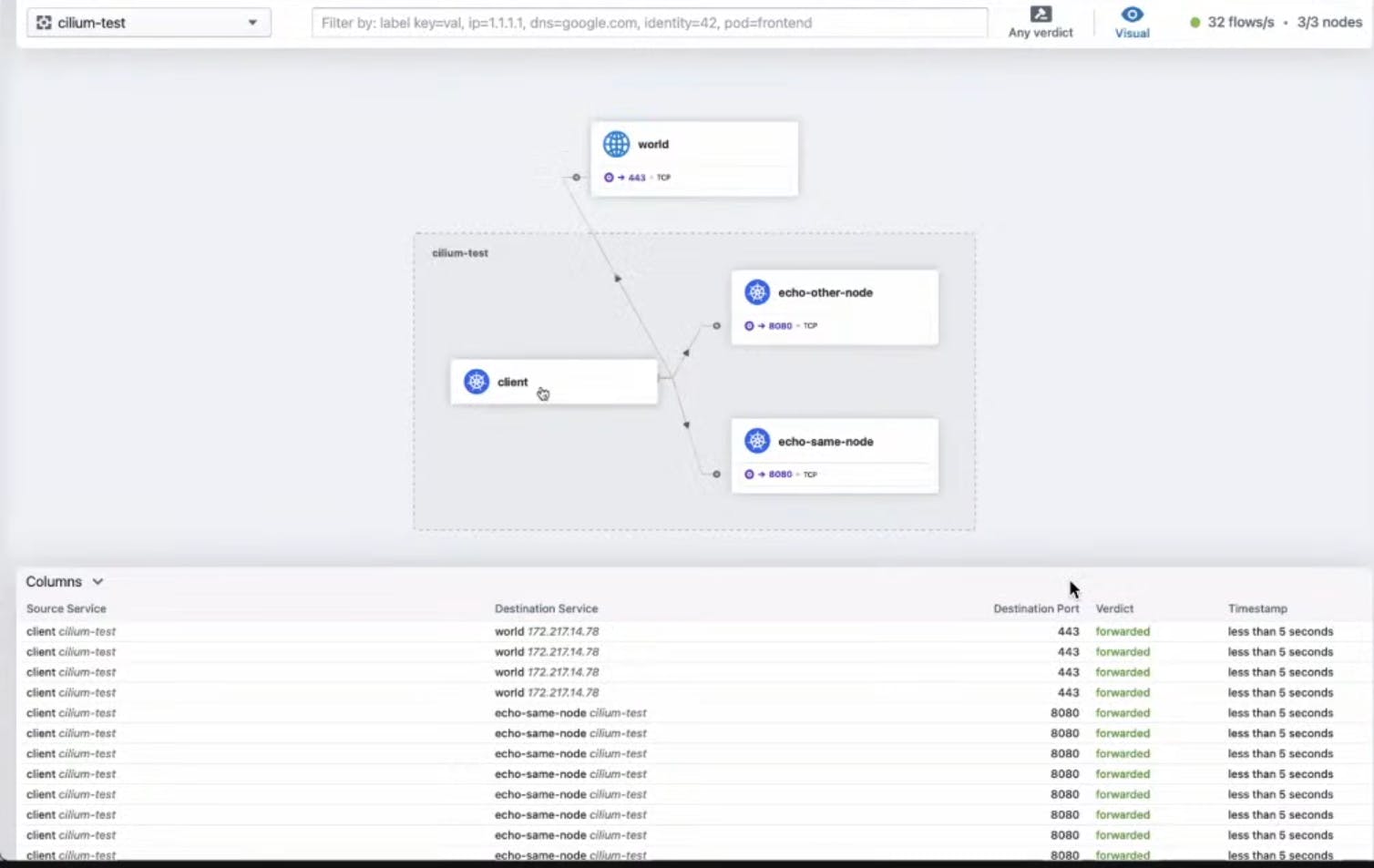

On choosing any of these namespaces, say cilium-test, we will be able to see the services in it along with detailed information about them, such as Source Service, Destination Service, Destination Port, Verdict, Timestamp, etc.

Load Balancing

Cilium provides load balancing for Kubernetes Services of type LoadBalancer and can announce service IPs over BGP. Earlier versions could already implement NodePort or LoadBalancer services natively, but you still needed to run MetalLB alongside to announce the Kubernetes Service IP to the outside world; newer versions do this with built-in BGP support.

Cilium also supports Maglev, a load balancing technique developed and used by Google. Maglev is known for its scalability and its ability to handle a large number of connections. It is based on consistent hashing, so load balancing works across nodes in a way that lets connections take different routes and still reach the same backend, which provides flexibility and robustness in large-scale infrastructure.

So we can replace MetalLB and no longer need to run MetalLB or BIRD ourselves. We can also replace the cloud provider's load balancer: all we need is to get the network packets to a node where Cilium is running. On AWS, for example, the packets need to be delivered to an EC2 instance so that Cilium can pick up the traffic. In short, the packets that need to be load balanced must reach a machine that Cilium runs on.
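To make the Maglev idea more concrete, here is a minimal sketch in Go of how a Maglev-style lookup table can be populated, following the general scheme from Google's Maglev paper. It is not Cilium's implementation: the FNV hash with a seed byte, the tiny table size of 13 and the names buildTable and pick are all assumptions made for illustration.

```go
package main

import (
	"fmt"
	"hash/fnv"
)

// hashWithSeed returns a 64-bit FNV-1a hash of s, varied by a seed byte so the
// sketch can derive several different hashes from one function.
func hashWithSeed(s string, seed byte) uint64 {
	h := fnv.New64a()
	h.Write([]byte{seed})
	h.Write([]byte(s))
	return h.Sum64()
}

// buildTable fills a lookup table of prime size m with backend indexes.
// Each backend walks its own permutation of the slots (offset + j*skip mod m)
// and backends take turns claiming their next free preferred slot.
func buildTable(backends []string, m int) []int {
	table := make([]int, m)
	for i := range table {
		table[i] = -1 // -1 marks an empty slot
	}
	n := len(backends)
	if n == 0 {
		return table
	}
	offset := make([]uint64, n)
	skip := make([]uint64, n)
	next := make([]uint64, n)
	for i, b := range backends {
		offset[i] = hashWithSeed(b, 0) % uint64(m)
		skip[i] = hashWithSeed(b, 1)%uint64(m-1) + 1
	}
	filled := 0
	for {
		for i := 0; i < n; i++ {
			c := (offset[i] + next[i]*skip[i]) % uint64(m)
			for table[c] >= 0 { // slot taken, try the next preference
				next[i]++
				c = (offset[i] + next[i]*skip[i]) % uint64(m)
			}
			table[c] = i
			next[i]++
			filled++
			if filled == m {
				return table
			}
		}
	}
}

// pick maps a connection key (for example a 5-tuple string) to a backend.
func pick(table []int, backends []string, connKey string) string {
	return backends[table[hashWithSeed(connKey, 2)%uint64(len(table))]]
}

func main() {
	backends := []string{"10.0.0.1", "10.0.0.2", "10.0.0.3"}
	table := buildTable(backends, 13) // real tables use a much larger prime
	fmt.Println(table)
	fmt.Println(pick(table, backends, "192.168.1.7:51234->10.96.0.10:80/TCP"))
}
```

Because the table is derived only from the backend list, every node that builds it from the same list picks the same backend for a given connection, which is what lets traffic arrive on any node.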

Cluster Mesh

Cluster Mesh is Cilium's ability to connect multiple Kubernetes clusters together, no matter whether they run on the same cloud provider, on-premises or on different providers. The requirements are proper connectivity between the clusters, firewalls that allow the required traffic across the clusters, and different node and pod IP CIDRs in each cluster.

When these requirements are met, we can expose backends running in different clusters through a shared global service, which gives our services availability across clusters.

In the image we have a frontend deployment and a backend deployment in each cluster, where the backend deployments are exposed through a global backend service.

This means that, by default, the backends in each cluster are exposed to every other cluster as well. When you access that service, you get responses from the endpoints in both clusters. It also means that when a backend in cluster2 is removed, the endpoints for that backend are removed in all clusters and traffic is forwarded to the remaining backend in the first cluster.
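The behavior described above can be sketched as a toy model in Go. This is only an illustration of the idea, not how Cluster Mesh is implemented: the GlobalService type, its methods and the addresses are hypothetical.

```go
package main

import (
	"fmt"
	"sort"
)

// GlobalService is a hypothetical model of a service shared across clusters:
// it keeps the backend addresses that each cluster contributes.
type GlobalService struct {
	backends map[string][]string // cluster name -> backend addresses
}

func NewGlobalService() *GlobalService {
	return &GlobalService{backends: make(map[string][]string)}
}

// Update replaces the backends a cluster contributes; an empty list removes
// that cluster from the service.
func (g *GlobalService) Update(cluster string, addrs []string) {
	if len(addrs) == 0 {
		delete(g.backends, cluster)
		return
	}
	g.backends[cluster] = append([]string(nil), addrs...)
}

// Endpoints returns the merged, sorted view that every cluster would see.
func (g *GlobalService) Endpoints() []string {
	var all []string
	for _, addrs := range g.backends {
		all = append(all, addrs...)
	}
	sort.Strings(all)
	return all
}

func main() {
	svc := NewGlobalService()
	svc.Update("cluster1", []string{"10.1.0.5"})
	svc.Update("cluster2", []string{"10.2.0.9"})
	fmt.Println(svc.Endpoints()) // backends from both clusters

	svc.Update("cluster2", nil)  // the backend in cluster2 is removed
	fmt.Println(svc.Endpoints()) // only the cluster1 backend remains
}
```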

Cluster Mesh also makes a shared services cluster possible: for example, to expose a Vault service for secrets, we can share the services of one cluster with the other clusters. Say the shared services environment has a namespace, a service and backends in that namespace. In each cluster that needs access to the shared services, you create the same namespace and service; through Cluster Mesh those clusters learn the endpoints and forward traffic to the shared services cluster.

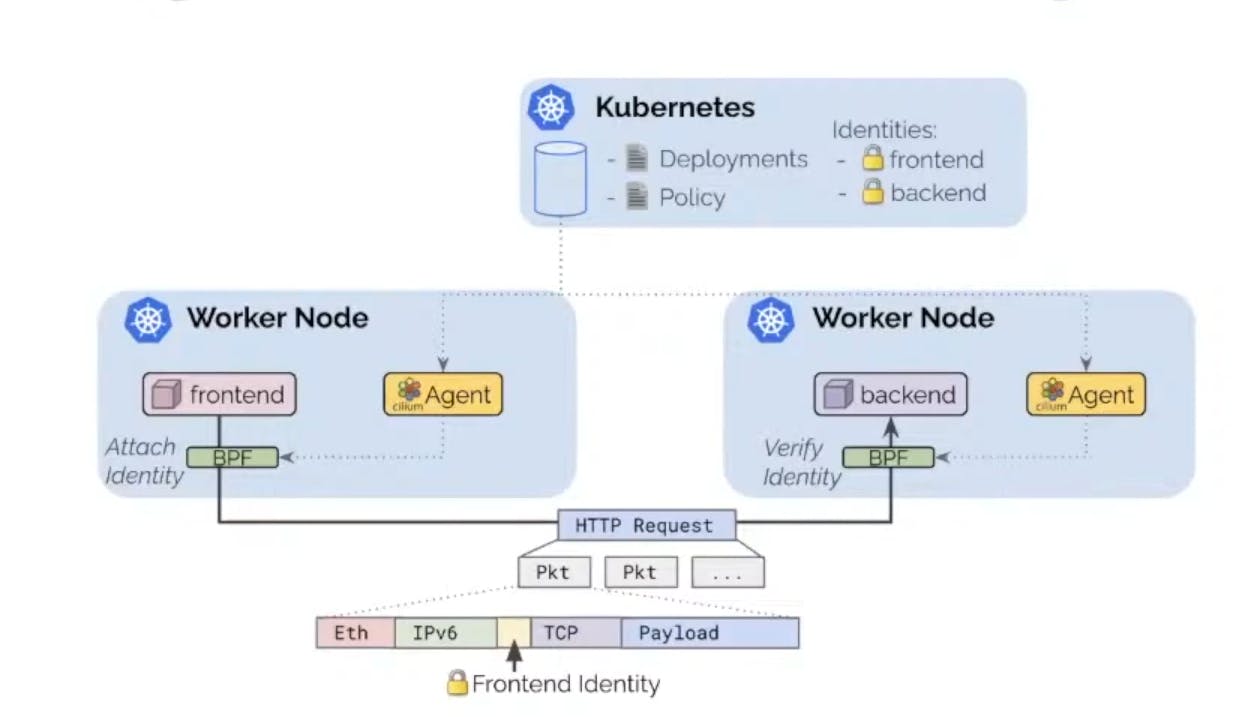

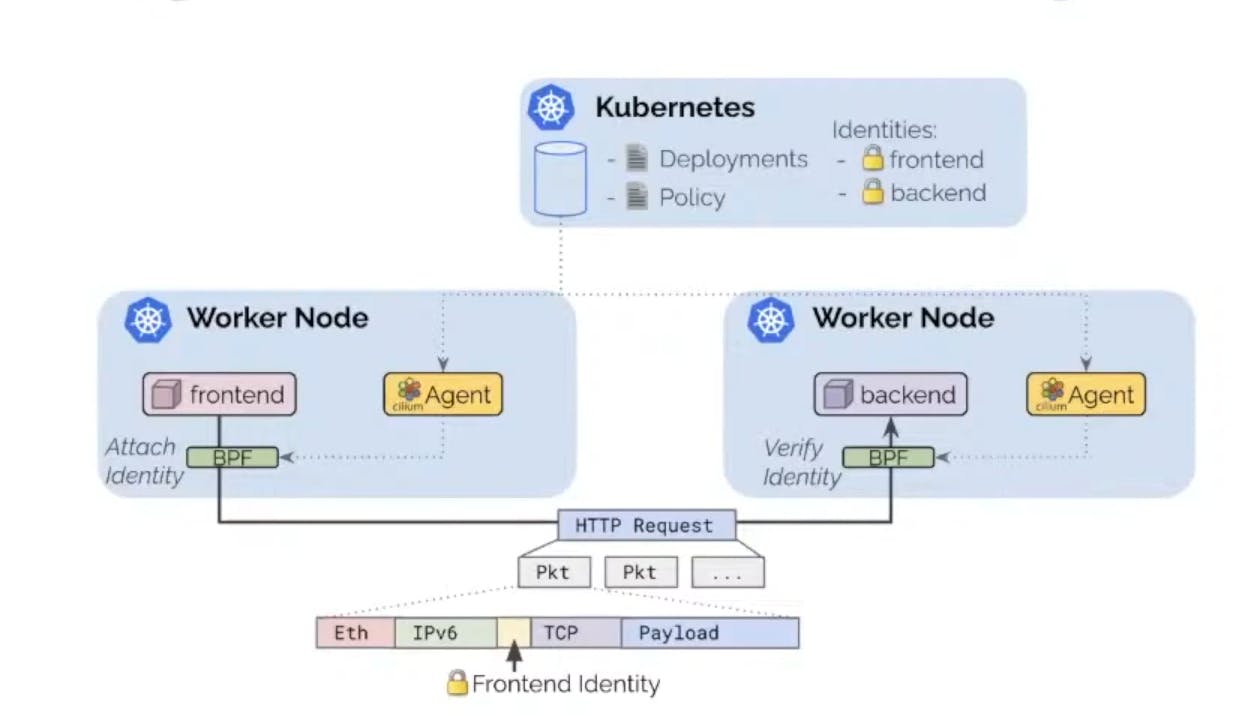

Identity Based Security

Cilium provides identity-based security with both Kubernetes and Cilium network policies. Any pod we deploy in Kubernetes has some combination of labels, and based on each unique set of labels Cilium creates a unique identity. Using BPF, Cilium attaches that identity to flows from, let's say, a frontend to a backend.

That identity is encapsulated in the actual packet, and Cilium verifies it when the traffic arrives at the backend and makes a decision on it using BPF. So it can allow or deny traffic, among other things, based on the conditions and the identity.
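As a rough illustration of the label-to-identity idea, here is a minimal sketch in Go. It assumes a simple in-memory allocator and a policy table keyed by source identity and destination port; the Allocator type, the policyKey struct and the starting number 1000 are hypothetical and do not reflect Cilium's internal data structures.

```go
package main

import (
	"fmt"
	"sort"
	"strings"
)

// labelKey turns a label set into a canonical string so that the same labels
// always produce the same key, regardless of map iteration order.
func labelKey(labels map[string]string) string {
	parts := make([]string, 0, len(labels))
	for k, v := range labels {
		parts = append(parts, k+"="+v)
	}
	sort.Strings(parts)
	return strings.Join(parts, ";")
}

// Allocator hands out one numeric identity per unique label set.
type Allocator struct {
	next uint32
	ids  map[string]uint32
}

func NewAllocator() *Allocator {
	return &Allocator{next: 1000, ids: make(map[string]uint32)}
}

// Identity returns the existing identity for a label set or allocates a new one.
func (a *Allocator) Identity(labels map[string]string) uint32 {
	key := labelKey(labels)
	if id, ok := a.ids[key]; ok {
		return id
	}
	a.next++
	a.ids[key] = a.next
	return a.next
}

// policyKey stands in for the key of a per-endpoint policy table.
type policyKey struct {
	srcIdentity uint32
	dstPort     uint16
}

func main() {
	alloc := NewAllocator()
	frontend := alloc.Identity(map[string]string{"app": "frontend"})
	other := alloc.Identity(map[string]string{"app": "batch"})

	// Policy on the backend: only the frontend identity may reach port 8080.
	allowed := map[policyKey]bool{{frontend, 8080}: true}

	fmt.Println(allowed[policyKey{frontend, 8080}]) // true
	fmt.Println(allowed[policyKey{other, 8080}])    // false
}
```

The point of the design is that the policy decision depends on a small number, not on pod IPs, so pods can come and go without the rules having to change.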

API Aware Authorization

Cilium is API-aware: besides the general Layer 4 filtering capabilities, it provides Layer 7 filtering capabilities for certain application protocols, so we are able to inspect HTTP traffic and filter on specific API calls.

    apiVersion: "cilium.io/v2"
    kind: CiliumNetworkPolicy
    [...]
    specs:
      - endpointSelector:
          matchLabels:
            app: cassandra
        ingress:
        - toPorts:
          - ports:
            - port: "9042"
              protocol: TCP
            rules:
              l7proto: cassandra
              l7:
              - query_action: "select"
                query_table: "myTable"

In this Cassandra example we configure a policy of kind: CiliumNetworkPolicy that applies to endpoints matching the label app: cassandra. It only allows ingress on port 9042 over protocol TCP, parses the traffic with the Layer 7 protocol l7proto: cassandra, and only permits requests with query_action: select on query_table: myTable.
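The effect of such a Layer 7 rule can be sketched in Go as a simple allow-list check. This is an illustration only: the Rule and Query types are hypothetical, the request is assumed to be parsed already, and exact string comparison is a simplification of whatever matching the real proxy performs.

```go
package main

import "fmt"

// Rule mirrors one entry under l7 in the policy: an allowed action and table.
type Rule struct {
	QueryAction string
	QueryTable  string
}

// Query is a hypothetical, already-parsed Cassandra request.
type Query struct {
	Action string
	Table  string
}

// allowed reports whether any rule permits the query. With no matching rule
// the request is denied, which is how an allow-list behaves.
func allowed(rules []Rule, q Query) bool {
	for _, r := range rules {
		if r.QueryAction == q.Action && r.QueryTable == q.Table {
			return true
		}
	}
	return false
}

func main() {
	rules := []Rule{{QueryAction: "select", QueryTable: "myTable"}}

	fmt.Println(allowed(rules, Query{"select", "myTable"})) // true
	fmt.Println(allowed(rules, Query{"insert", "myTable"})) // false
	fmt.Println(allowed(rules, Query{"select", "other"}))   // false
}
```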

Networking & Servicing in Cilium

Networking

In Kubernetes terms, networking means providing connectivity: giving pods virtual network interfaces, assigning IPs, and providing inter-node connectivity using an overlay or direct routing.

Most of the time a Kubernetes networking or CNI solution relies on some kind of iptables or IPVS implementation using kube-proxy, which lets services keep track of pods as endpoints by updating iptables when pods appear and are removed.

Cilium approach for Networking

Cilium does this differently, as it uses eBPF for all of that connectivity. Cilium runs an agent on each node and connects the nodes of the cluster together using overlays by default, direct routing, or some kind of hybrid solution in cloud provider environments.

The data plane is likewise eBPF-based, which provides a lot of opportunities at scale: it can replace kube-proxy with an eBPF-powered service implementation that performs better at scale when updating our services.

Servicing

Services are used to give a single point of entry for accessing a certain application or a certain collection of pods.

Let's say app1 and app2 need to connect. Both are ephemeral: pods can be rescheduled at any time and get a new IP address when they are, much like the ephemeral ports that the operating system dynamically assigns to a client application when it initiates a connection to a server.

So app1 and app2 have ephemeral IPs, and we don't want to connect to a pod IP directly; instead we use a ClusterIP. The limitation shows up at high scale: with a Service of type ClusterIP in a traditional IPVS or iptables implementation, every time an endpoint or a service is created, added or removed, the iptables rules need to be recomputed and re-evaluated, and these changes to services are not atomic.

Cilium approach on Servicing

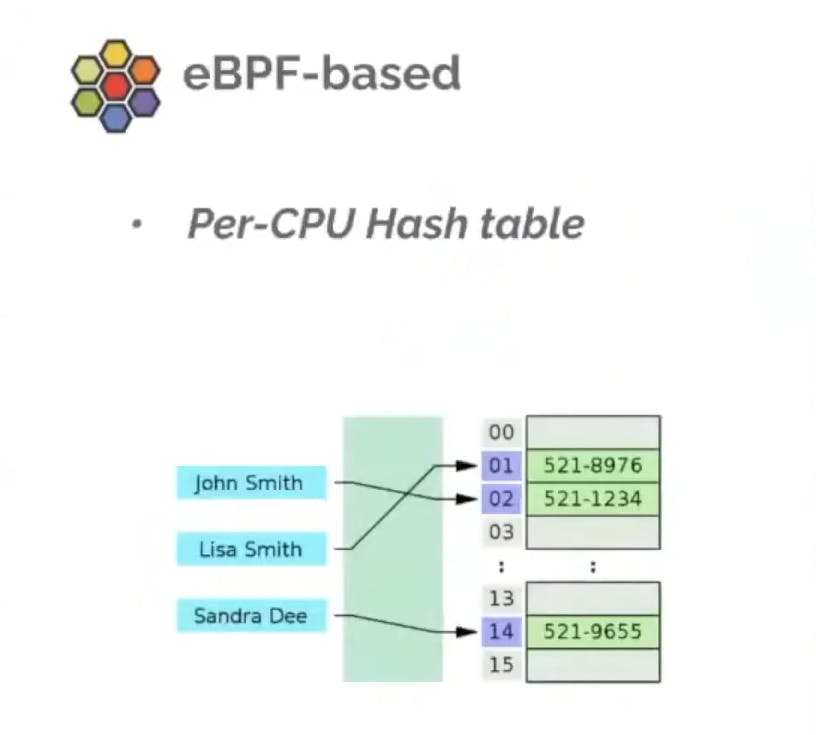

With Cilium, however, we implement services using an eBPF-based per-CPU hash table, which means we create BPF maps where changes are atomic.

For example, if an endpoint changes or a deployment is scaled down, those changes are atomic, and we don't need to reload the whole BPF map just to change one entry in it.

Older implementations use iptables, which is a linear list whose rules have to be replaced as a whole. Every service is represented on each node of the cluster, so this gets worse at large scale: as the number of services, pods and nodes in our Kubernetes cluster grows, at some point updating a single entity takes a long time to propagate into iptables because of these linear, non-atomic updates.
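The difference in lookup cost can be illustrated with a small Go sketch. It assumes a slice as a stand-in for a linear rule chain and a Go map as a stand-in for a BPF hash map; the rule type, the synthetic service IPs and the helper names are hypothetical, and a Go map says nothing about the atomicity of real BPF map updates.

```go
package main

import "fmt"

// rule is a hypothetical service entry: a service IP and the backend behind it.
type rule struct {
	serviceIP string
	backend   string
}

// lookupLinear scans rules in order, the way a rule chain is evaluated, and
// also reports how many rules were inspected.
func lookupLinear(rules []rule, ip string) (string, int) {
	for i, r := range rules {
		if r.serviceIP == ip {
			return r.backend, i + 1
		}
	}
	return "", len(rules)
}

// buildRules creates n synthetic services named backend-0 .. backend-(n-1).
func buildRules(n int) []rule {
	rules := make([]rule, n)
	for i := range rules {
		rules[i] = rule{
			serviceIP: fmt.Sprintf("10.96.%d.%d", i/256, i%256),
			backend:   fmt.Sprintf("backend-%d", i),
		}
	}
	return rules
}

func main() {
	rules := buildRules(5000)

	// Hash table view of the same services: one entry per service IP.
	table := make(map[string]string, len(rules))
	for _, r := range rules {
		table[r.serviceIP] = r.backend
	}

	last := rules[len(rules)-1].serviceIP
	backend, inspected := lookupLinear(rules, last)
	fmt.Println(backend, inspected) // backend-4999 5000
	fmt.Println(table[last])        // backend-4999

	// Changing one service touches a single entry; the rest is untouched.
	table[last] = "backend-new"
	fmt.Println(table[last]) // backend-new
}
```

With 5000 services the linear scan inspects every rule to reach the last one, while the hash lookup goes straight to the entry, which matches the intuition behind the scaling numbers quoted for iptables later in this post.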

Which Big Players Are Using Cilium?

Now that you have understood some of the mechanisms and the architecture of Cilium, it is interesting to read about the big players in the domain who have been using it for quite a long time, and why they chose it for their infrastructure.

- AWS

Isovalent partner AWS has just announced the availability of EKS Anywhere (EKS-A) to manage on-premises Kubernetes clusters. As part of this, AWS picked Cilium as the built-in default for networking and security. So, as you create your first EKS Anywhere (EKS-A) cluster, you will automatically have Cilium installed and benefit from the powers of eBPF.

AWS joins other cloud providers in picking Cilium as the networking and security layer. Managed Kubernetes offerings from Google Cloud, Alibaba, DigitalOcean, and several smaller platforms already leverage Cilium. With this latest announcement, three out of the big four cloud providers are now standardizing on Cilium for their cloud native networking and security needs.

- Google Kubernetes Engine

Google clearly has incredible technical chops and could have just built their dataplane directly on eBPF, instead, the GKE team has decided to leverage Cilium and contribute back. This is of course a huge honor for everybody who has contributed to Cilium over the years and shows Google's commitment to open collaboration.

Collaboration helped in auto-detection of EndpointSlices support, ability to join IPv6 NDP multicast groups and support for IPv6 neighbor discovery for pod IPs, Geneve encapsulation bugfixes, and optimizations to the socket cookie-based load-balancing.

- Azure Kubernetes Service

The new dataplane comes out of a collaboration of Microsoft and Isovalent and combines Cilium with the existing control plane of Azure CNI to bring a high-performance eBPF-based dataplane with extensive security and observability capabilities to Azure Kubernetes Service (AKS).

Users of Cilium benefit from the new and improved Azure CNI control plane with improved IP Address Management (IPAM) and even better integration into Azure Kubernetes Service (AKS). Users of AKS benefit from the full feature set of Cilium.

- Alibaba Cloud Service

Alibaba Cloud uses Cilium as the BPF agent on nodes to configure the BPF rules for pod ENIs. With Cilium and eBPF, Alibaba Cloud's performance improves significantly: by 32% compared to iptables mode and by 62% compared to IPVS mode.

With eBPF, there is almost no performance loss when the number of Services is increased to 5000, whereas in iptables mode the performance loss at 5000 Services is 61%.

- Datadog

Datadog runs tens of clusters with 10,000+ nodes and 100,000+ pods across multiple cloud providers. They began to reach a point where traditional networking tools were not up for the job anymore. iptables, IPVS, and cloud specific CNIs were not designed to power Datadog’s data plane needs.

Datadog, inspired by what folks and developers said about Cilium at KubeCon + CloudNativeCon, turned to Cilium as their CNI and kube-proxy replacement to take advantage of the power of eBPF and provide a consistent networking experience for their users across any cloud. They also implemented Cilium Network Policies to meet certifications and run in regulated environments. With Cilium, Datadog is now able to scale up to 10,000,000,000,000+ data points per day across more than 18,500 customers. They are able to run their network at scale and keep their customers’ data secure.

Datadog has started using Envoy for more use cases and is very interested in the sidecarless approach developed in the context of Cilium Service Mesh. They are also looking outside the cluster and considering using eBPF to create a smarter and more efficient network edge in the future. Using Cilium L4LB XDP to create their own load balancers rather than relying upon a cloud provider would allow them to provide a consistent experience to end users.

It is a really interesting case study of how Cilium helped Datadog, which you can find at https://www.cncf.io/case-studies/datadog/

Golang implementation in Cilium

Here I will share some basics of how Cilium uses Golang in its structure and infrastructure:

eBPF Code Generation:

- Cilium heavily utilizes eBPF for its networking and security functionalities. While eBPF programs are traditionally written in C, Cilium generates eBPF bytecode dynamically during runtime. Golang is instrumental in this process, providing the flexibility to generate, compile, and load eBPF programs on the fly.

BPF CO-RE Support:

- The Go ecosystem around eBPF, notably the cilium/ebpf library, supports BPF CO-RE (Compile Once - Run Everywhere). This allows eBPF programs to be compiled once and loaded on different kernels, enhancing the portability of the eBPF bytecode across kernel versions.

Goroutines for Concurrent Operations:

- Cilium leverages Goroutines, which are lightweight concurrent threads in Golang, to handle multiple tasks concurrently. In the context of Cilium, this is crucial for efficiently managing networking and security tasks across a large number of microservices. Goroutines contribute to the scalability and responsiveness of Cilium's runtime.
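As a small illustration of the goroutine pattern described above, here is a minimal worker pool sketch in Go. It is not taken from the Cilium codebase: the regenerateAll function, the endpoint names and the idea of "regenerating" an endpoint in a worker are hypothetical stand-ins for any per-endpoint task.

```go
package main

import (
	"fmt"
	"sync"
)

// regenerateAll processes every endpoint using a fixed number of worker
// goroutines and returns the set of endpoints that were handled.
func regenerateAll(endpoints []string, workers int) map[string]bool {
	jobs := make(chan string)
	done := make(map[string]bool)
	var mu sync.Mutex // protects done, which several goroutines write to
	var wg sync.WaitGroup

	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for ep := range jobs {
				// Placeholder for real per-endpoint work.
				mu.Lock()
				done[ep] = true
				mu.Unlock()
			}
		}()
	}

	for _, ep := range endpoints {
		jobs <- ep
	}
	close(jobs) // lets the workers' range loops finish
	wg.Wait()
	return done
}

func main() {
	done := regenerateAll([]string{"ep-1", "ep-2", "ep-3", "ep-4"}, 2)
	fmt.Println(len(done)) // 4
}
```

The channel spreads the work across the workers, and the WaitGroup makes sure the function only returns once every endpoint has been handled.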

Golang for API and CLI Development:

- The control plane and management components of Cilium, including its API server and command-line interface (CLI), are often implemented in Golang. This allows for consistent and reliable interactions with Cilium's features, providing a user-friendly interface for administrators and developers.

Community Collaboration:

- Golang's simplicity and readability make it an accessible language for a broad developer community. The Cilium project benefits from this by attracting contributors who can easily understand and work with the Golang codebase. The use of Golang promotes collaboration and ensures a diverse set of contributors, which is essential for the vitality of an open-source project.

Testing and Tooling:

- Golang has a strong emphasis on testing, and Cilium takes advantage of this by incorporating comprehensive testing methodologies. Automated testing tools and frameworks in Golang enable the Cilium project to maintain code quality, catch bugs early, and ensure robustness in its networking and security implementations.

Golang's Standard Library:

- The standard library of Golang provides a rich set of functionalities that Cilium leverages for various tasks, such as HTTP handling, concurrency management, and encryption. This reduces the need for external dependencies and ensures a consistent and reliable foundation for Cilium's functionalities.

I hope you learned something about Cilium and the different tools and services it uses to improve its infrastructure and give its users as many features and benefits as possible. Soon I will be sharing more insights about the Cilium infrastructure and many other CNCF projects.

If you liked this article, please react to it and connect with me on Twitter if you are also a tech enthusiast. I would love to collaborate with people and share the experience of tech😄😄.

My Twitter Profile: